In 2022, Google ran over 800,000 experiments that resulted in more than 4,000 improvements to Search. Spotify is running thousands of experiments per year across virtually every aspect of the business.

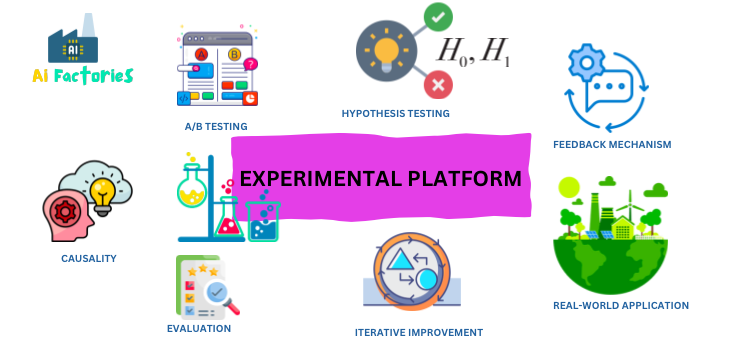

The “experimental platform” is a pivotal component of an AI factory. It is where algorithms are tested and refined before they are deployed in real-world scenarios. It functions much like a laboratory for AI models, where data scientists and engineers run controlled experiments to validate their hypotheses about algorithm improvements or new features. Here is a more detailed breakdown of how an experimental platform operates and why it matters in the AI development cycle:

1. Hypothesis Testing

- In an AI context, a hypothesis usually involves assumptions about how changes to an algorithm might enhance its performance or accuracy. For instance, a hypothesis could be that incorporating additional data points or changing the model architecture will improve prediction accuracy.

2. Controlled Experimentation

- The experimental platform lets teams set up controlled experiments to test these hypotheses. A common design is A/B testing: traffic is randomly split between two versions of the model running simultaneously, one that includes the proposed change (the treatment) and one that does not (the control).

- These experiments are carefully designed to isolate the effects of the changes made, ensuring that any observed differences in outcomes can be attributed to the modifications and not to external variables.
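
Randomly splitting traffic between control and treatment is what isolates the effect of a change. One common way to implement the split is deterministic hashing: hashing the user ID together with an experiment name gives each user a stable, effectively random bucket, so a returning user always sees the same variant. The sketch below assumes a simple two-arm test; `assign_variant` and the experiment name are hypothetical, not part of any specific platform.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically assign a user to "control" or "treatment" (hypothetical helper)."""
    # Salting the hash with the experiment name keeps assignments independent across experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash onto the interval [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "treatment" if bucket < treatment_share else "control"

# Usage: count assignments for 10,000 synthetic users; the split comes out close to 50/50.
counts = {"control": 0, "treatment": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "new-ranking-model")] += 1
print(counts)
```

Because assignment depends only on the hash, the platform does not need to store a table mapping users to groups, and the same user gets the same variant on every request.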

3. Causality Confirmation

- One of the key goals of the experimental platform is to ascertain causality, not just correlation. This means ensuring that the changes to the algorithm directly cause improvements in performance, rather than improvements being coincidentally associated with those changes.

- Techniques such as counterfactual reasoning, which compares the outcome of a change against what would have happened without it, can be used to assess causality. In a randomized experiment, the control group provides an estimate of that counterfactual.
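
To see why a randomized control group matters, consider a small simulation in which every number is an illustrative assumption. Highly engaged users are both more likely to opt into a new feature and more likely to convert anyway, while the feature itself has no effect at all. Comparing users who opted in with everyone else shows a spurious lift (correlation); assigning the feature by coin flip recovers the true effect of roughly zero (causation).

```python
import random

def mean(values: list[int]) -> float:
    return sum(values) / len(values)

def simulate(n: int = 200_000, seed: int = 7) -> tuple[float, float]:
    """Return (naive lift, randomized lift) for a feature with zero true effect."""
    rng = random.Random(seed)
    true_effect = 0.0  # assumption: the new feature changes nothing

    def converts(engaged: bool, treated: bool) -> int:
        # Engaged users convert more often whether or not they see the feature.
        base_rate = 0.30 if engaged else 0.10
        return int(rng.random() < base_rate + (true_effect if treated else 0.0))

    observational = {True: [], False: []}  # keyed by "chose to use the feature"
    experiment = {True: [], False: []}     # keyed by "randomly assigned the feature"
    for _ in range(n):
        engaged = rng.random() < 0.5
        # Observational world: engaged users opt in far more often (a confounder).
        opted_in = rng.random() < (0.8 if engaged else 0.2)
        observational[opted_in].append(converts(engaged, opted_in))
        # Experimental world: a coin flip decides, independent of engagement.
        assigned = rng.random() < 0.5
        experiment[assigned].append(converts(engaged, assigned))

    naive = mean(observational[True]) - mean(observational[False])
    randomized = mean(experiment[True]) - mean(experiment[False])
    return naive, randomized

naive_lift, randomized_lift = simulate()
print(f"naive lift: {naive_lift:+.3f}, randomized lift: {randomized_lift:+.3f}")
```

Under these assumptions the opt-in group converts at about 26% versus 14% for everyone else, a lift of roughly 12 percentage points caused entirely by engagement, while the randomized comparison stays close to zero.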

4. Rigorous Evaluation

- The platform must provide robust tools for evaluating the results of experiments. This includes statistical methods to analyze outcomes and validate whether the changes have statistically significant positive effects.

- Metrics and performance indicators need to be clearly defined and monitored during the testing phase to evaluate the success of the new or modified algorithms.
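
For a conversion-style metric, one standard significance check is the two-proportion z-test. The sketch below uses only Python's standard library; the counts in the usage line are made up for illustration, and the 0.05 threshold is a common convention rather than a rule.

```python
from math import erf, sqrt

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both groups share the same conversion rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via erf: Phi(x) = 0.5 * (1 + erf(x / sqrt(2))).
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - phi)
    return z, p_value

# Illustrative counts: control converts 1,000 of 20,000 users; treatment 1,100 of 20,000.
z, p = two_proportion_z_test(1_000, 20_000, 1_100, 20_000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```

With these counts (5.0% versus 5.5%), z is about 2.24 and p is about 0.025, so the difference would be reported as significant at the 0.05 level.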

5. Iterative Improvement

- Experimental platforms enable iterative improvements. Based on the results of initial tests, algorithms can be tweaked and retested. This iterative process helps in fine-tuning the models until the desired performance levels are achieved.

6. Scalability and Real-World Testing

- After successful experiments, the platform can facilitate scalability tests to evaluate how the algorithm performs under different or more extensive real-world conditions.

- This often involves gradual rollout and monitoring for unforeseen issues that didn’t appear during the controlled tests.
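
A gradual rollout can be expressed as a ramp schedule with a guardrail check at each stage. In this minimal sketch the stage percentages, the 10% error-rate guardrail, and the `observed_error_rate` callback are all hypothetical; a real platform would read live monitoring data instead of a function.

```python
from typing import Callable

RAMP_STAGES = [1, 5, 25, 50, 100]  # percent of traffic exposed at each stage (assumed)

def staged_rollout(
    observed_error_rate: Callable[[int], float],
    baseline_error_rate: float,
    max_relative_increase: float = 0.10,
) -> int:
    """Ramp exposure stage by stage; return the final traffic percentage (0 means rolled back)."""
    limit = baseline_error_rate * (1 + max_relative_increase)
    for percent in RAMP_STAGES:
        rate = observed_error_rate(percent)
        if rate > limit:
            print(f"Guardrail breached at {percent}% ({rate:.3%} > {limit:.3%}); rolling back")
            return 0
        print(f"{percent}% of traffic: error rate {rate:.3%} within guardrail")
    return 100

# Illustrative: the new model only degrades under heavier load (50% of traffic and above).
final = staged_rollout(lambda pct: 0.021 if pct < 50 else 0.030, baseline_error_rate=0.02)
print(f"Final exposure: {final}%")
```

In this example the first three stages pass, and the problem that did not show up at small scale is caught at 50% exposure, before the change reaches all users.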

7. Feedback Integration

- An essential feature of the experimental platform is the integration of feedback mechanisms. These mechanisms collect data from the experiments’ outcomes to refine the models further. Feedback can also come from real-world use after partial deployment, which loops back into new hypotheses for future experiments.

By employing an experimental platform, organizations can reduce the risks associated with deploying untested AI models. It helps ensure that algorithms not only perform as expected but also contribute positively to decision-making in a predictable and verifiable way. This structured approach to development is crucial for maintaining reliability and trust in AI applications across industries.

Technologies Used in Experimental Platforms

The tools used in an “experimental platform” for AI factories are diverse and cater to various stages of AI model development, testing, and deployment. Here are some key tools and technologies commonly utilized:

1. Development and Frameworks

- TensorFlow and PyTorch: Popular frameworks for building and training machine learning models due to their flexibility, extensive libraries, and community support.

- Scikit-learn: Useful for classical machine learning algorithms, which are often used in conjunction with neural networks in hybrid approaches.

2. Experiment Management

- MLflow: Helps manage the machine learning lifecycle, including experimentation, reproducibility, and deployment. It tracks experiments to record and compare parameters and results.

- Weights & Biases: Provides tools for tracking experiments, visualizing data, and organizing ML projects to improve reproducibility and collaboration.

3. Data Management and Version Control

- DVC (Data Version Control): Brings Git-style version control to machine learning projects, enabling you to track datasets, models, and experiments alongside your code.

- Apache Airflow: Used for orchestrating complex computational workflows and data processing pipelines.

4. Testing and Validation

- TensorBoard: Visualization toolkit for TensorFlow that allows tracking metrics during the training of machine learning models, visualizing models, and showing statistics about the model to aid in debugging.

- Jupyter Notebooks: Interactive computational environment where you can combine code execution, rich text, mathematics, plots, and rich media to test and validate models interactively.

5. Deployment and Scaling

- Kubernetes: Automates the deployment, scaling, and management of containerized applications, crucial for deploying models reliably at scale.

- Docker: Used to create, deploy, and run applications by using containers, ensuring consistency across multiple development and release cycles.

6. Monitoring and Performance Tools

- Prometheus and Grafana: Prometheus collects and stores time-series metrics and Grafana visualizes them; together they are widely used to monitor the performance and health of models in production.

- ELK Stack (Elasticsearch, Logstash, Kibana): Collects, indexes, and visualizes logs from multiple sources, giving comprehensive insight into application performance and behavior.

7. Cloud Platforms

- AWS, Google Cloud Platform, and Microsoft Azure: Provide comprehensive cloud computing services, including AI-specific tools (such as Amazon SageMaker, Vertex AI on Google Cloud (formerly AI Platform), and Azure Machine Learning), vast storage options, and powerful computing capabilities to deploy and scale AI models.

8. Simulators and Virtual Environments

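- SimPy and OpenAI Gym (now maintained as Gymnasium): SimPy is a discrete-event simulation framework, and Gym provides standardized reinforcement learning environments; both can be used to simulate real-world scenarios and test how models perform under various conditions.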
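
Simulation-based testing usually follows a reset/step loop. The sketch below mimics the convention used by Gymnasium, the maintained successor to OpenAI Gym, where `reset()` returns `(observation, info)` and `step()` returns `(observation, reward, terminated, truncated, info)`. It does not import either library: `DemandEnv`, its reward, and the fixed `stock_ten` policy are hypothetical toys that only show how one policy can be evaluated under several simulated conditions.

```python
import random

class DemandEnv:
    """Hypothetical toy environment following Gymnasium's reset/step return convention.

    Each step the agent chooses how many units to stock, random demand arrives,
    and the reward is units sold minus a holding cost for unsold units.
    """

    def __init__(self, mean_demand: float, episode_length: int = 30, seed: int = 0):
        self.mean_demand = mean_demand
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.t = 0

    def reset(self):
        self.t = 0
        return self.t, {}  # (observation, info)

    def step(self, action: int):
        demand = max(0, round(self.rng.gauss(self.mean_demand, 2)))
        sold = min(action, demand)
        reward = sold - 0.5 * (action - sold)  # revenue minus holding cost of leftovers
        self.t += 1
        truncated = self.t >= self.episode_length  # episode ends on a time limit
        # (observation, reward, terminated, truncated, info)
        return self.t, reward, False, truncated, {"demand": demand}

def evaluate(policy, env) -> float:
    """Run one episode and return the total reward."""
    obs, _ = env.reset()
    total = 0.0
    while True:
        obs, reward, terminated, truncated, _ = env.step(policy(obs))
        total += reward
        if terminated or truncated:
            return total

def stock_ten(obs) -> int:
    return 10  # a fixed policy: always stock 10 units

# Stress-test the same policy under low, expected, and high demand.
results = {d: evaluate(stock_ten, DemandEnv(mean_demand=d)) for d in (5, 10, 15)}
print(results)
```

Running the same policy across demand regimes exposes conditions where it performs poorly (here, low demand leaves many unsold units) before it ever meets real traffic.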

These tools form an integrated ecosystem that supports the rigorous demands of AI experimental platforms, helping teams develop, test, and deploy AI models efficiently. Together they help ensure that solutions are robust, scalable, and perform well under different operational conditions.

Machine Learning Experiment Tracking Tools

Machine Learning (ML) experiment tracking is crucial for managing the lifecycle of machine learning projects, especially when dealing with complex models and datasets. These tools help data scientists and developers track experiments, manage models, log parameters, and analyze results systematically.
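
At its core, experiment tracking means recording each run's parameters and metrics in a durable, queryable form so runs can be compared later. The sketch below illustrates that idea with the standard library only; the `RunTracker` class and its JSON-lines file format are hypothetical and are not the API of any of the tools listed below.

```python
import json
import tempfile
import time
import uuid
from pathlib import Path

class RunTracker:
    """Hypothetical minimal tracker: an append-only JSON-lines log of runs."""

    def __init__(self, path: Path):
        self.path = path

    def log_run(self, params: dict, metrics: dict) -> str:
        """Record one run's configuration and results; return its ID."""
        run = {
            "run_id": uuid.uuid4().hex,
            "timestamp": time.time(),
            "params": params,
            "metrics": metrics,
        }
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(run) + "\n")
        return run["run_id"]

    def runs(self) -> list[dict]:
        """Load every logged run (an empty list if nothing has been logged)."""
        if not self.path.exists():
            return []
        with self.path.open(encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]

    def best_run(self, metric: str, higher_is_better: bool = True) -> dict:
        """Return the run with the best value of `metric` (ValueError if no run logged it)."""
        candidates = [r for r in self.runs() if metric in r["metrics"]]
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda r: r["metrics"][metric])

# Usage: log two illustrative runs to a throwaway directory and compare them.
tracker = RunTracker(Path(tempfile.mkdtemp()) / "runs.jsonl")
tracker.log_run({"lr": 0.01, "layers": 2}, {"val_accuracy": 0.91})
tracker.log_run({"lr": 0.001, "layers": 4}, {"val_accuracy": 0.93})
print(tracker.best_run("val_accuracy")["params"])  # {'lr': 0.001, 'layers': 4}
```

Dedicated tools add what this sketch lacks, such as a UI, artifact storage, concurrency-safe backends, and a model registry.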

Here are some of the key tools used for ML experiment tracking:

1. MLflow

- Description: MLflow is an open-source platform primarily used for managing the end-to-end machine learning lifecycle. It includes features for tracking experiments, packaging code into reproducible runs, and sharing and deploying models.

- Key Features: Parameter and metric logging, model versioning, and a centralized model registry for collaboration.

2. Weights & Biases (W&B)

- Description: Weights & Biases offers tools for experiment tracking, model optimization, and dataset versioning in a very user-friendly environment. It is highly interactive and integrates easily with other frameworks.

- Key Features: Real-time monitoring of training processes, visualizations of models and results, integration with many ML frameworks.

3. Comet.ml

- Description: Comet.ml provides a self-hosted and cloud-based meta machine learning platform allowing data scientists and teams to track, compare, explain and optimize experiments and models.

- Key Features: Code version control, hyperparameter optimization, integration with Jupyter Notebooks, and detailed comparison across experiments.

4. TensorBoard

- Description: TensorBoard is a visualization toolkit for TensorFlow (also usable from PyTorch) that lets you visualize many aspects of machine learning training. It helps you understand training runs and view metrics such as loss and accuracy.

- Key Features: Visualize metrics during training, plot quantitative metrics over time, display model architecture, view histograms of weights, biases, or other tensors as they change over time.

5. Sacred

- Description: Sacred is a Python tool that helps machine learning researchers configure, organize, log, and reproduce experiments. It is designed with a focus on simplicity and adaptability, and it integrates with other Python-based tools.

- Key Features: Automatic and manual configuration saving, command line integration for parameter updates, and output capturing.

6. ClearML

- Description: ClearML (formerly known as Allegro Trains) is an open-source tool that automates and orchestrates machine learning experiments. It is designed to track, manage, and reproduce experiments and streamline model deployment.

- Key Features: Automatic experiment tracking, hardware monitoring, and integration with popular data science tools and frameworks.

7. Guild AI

- Description: Guild AI is an open-source tool that helps track ML experiments without requiring code changes. It focuses on simplicity and flexibility.

- Key Features: Automatic capture of experiment details, including code changes, dependencies, and system metrics; no need to modify code to start tracking.

8. Aim

- Description: Aim is an open-source, self-hosted ML experiment tracking tool designed specifically for deep learning. It is built to be lightweight and flexible.

- Key Features: Scalable and detailed tracking of metrics, parameters, and artifacts; seamless GitHub integration for collaboration.

These tools vary in terms of complexity and features offered, but all provide crucial capabilities that help improve the efficiency and reproducibility of machine learning experiments. They are essential for teams looking to streamline their machine learning workflows and ensure that insights and optimizations are accurately recorded and assessed.

Other Episodes:

AI Factories: Episode 1 – So, what exactly is “AI Factories” or “AI Factory”?

AI Factories: Episode 2 – Virtuous Cycle in AI Factories

AI Factories: Episode 3 – The Components of AI Factories

AI Factories: Episode 4 – Data Pipelines

AI Factories: Episode 5 – Algorithm Development
