Algorithm development in AI factories is a critical, iterative process of creating and refining the algorithms at the core of a digital firm’s operations. These algorithms let the firm make data-driven decisions, predict future trends, and adapt efficiently to changing market conditions. Here’s an in-depth look at how the process is typically structured: the stages involved, the tools used, and other key considerations.

1. Phases of Algorithm Development in AI Factories

Stage 1: Problem Identification and Definition

- Purpose: Understand and define the business problem that needs a solution.

- Activities: Engaging with stakeholders to gather requirements, defining the scope of the problem, and setting clear objectives for what the algorithm needs to achieve.
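To make this stage's output concrete, the agreed objectives can be captured as a small, machine-checkable specification that later stages test against. The sketch below is illustrative only: `ProblemSpec`, its fields, the churn example, and the 0.80 recall threshold are all hypothetical placeholders, not part of any standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProblemSpec:
    """Hypothetical record of an agreed problem definition."""

    business_question: str
    task_type: str         # e.g. "classification", "regression", "clustering"
    target: str            # name of the field the model should predict
    success_metric: str    # metric agreed with stakeholders, e.g. "recall"
    min_acceptable: float  # value the metric must reach before deployment

    def is_met(self, metrics: dict) -> bool:
        """Return True if the evaluated metrics satisfy the success criterion."""
        value = metrics.get(self.success_metric)
        return value is not None and value >= self.min_acceptable


# Illustrative example: a churn-prediction problem.
churn_spec = ProblemSpec(
    business_question="Which customers are likely to cancel next month?",
    task_type="classification",
    target="churned",
    success_metric="recall",
    min_acceptable=0.80,
)
```

Writing the success criterion down this way lets the validation stage check a trained model against the exact threshold stakeholders agreed on; for example, `churn_spec.is_met({"recall": 0.84})` returns `True`.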

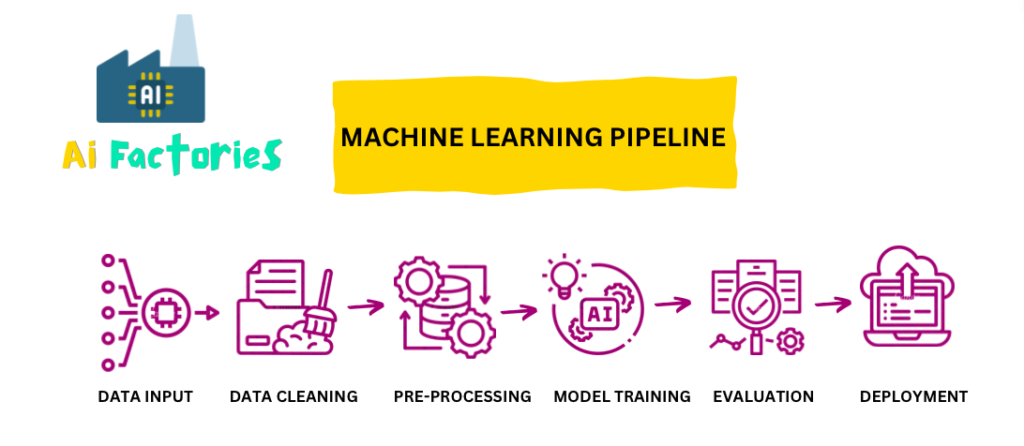

Stage 2: Data Collection and Preparation

- Purpose: Gather and prepare the data required to develop and train the algorithm.

- Activities: Collecting data from various sources (internal and external), cleaning data to remove inaccuracies or inconsistencies, and transforming data into a usable format. This stage may also involve data augmentation to enhance model performance.
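A minimal sketch of the cleaning step, assuming records arrive as Python dictionaries with hypothetical `customer_id`, `monthly_spend`, and `region` fields. The rules it applies (drop incomplete or unparseable rows, treat negative spend as an entry error, keep the first record per customer) are illustrative assumptions. In practice Pandas would usually do this work, but the sketch sticks to the standard library so each rule is visible.

```python
def clean_records(records, required=("customer_id", "monthly_spend")):
    """Drop incomplete or invalid rows, normalise fields, and remove duplicates."""
    cleaned, seen_ids = [], set()
    for row in records:
        # Skip rows where any required field is missing or blank.
        if any(row.get(f) is None or str(row.get(f)).strip() == "" for f in required):
            continue
        customer_id = str(row["customer_id"]).strip()
        try:
            spend = float(row["monthly_spend"])
        except (TypeError, ValueError):
            continue  # unparseable number: treated as an inaccuracy and dropped
        if spend < 0:
            continue  # assumption: negative spend is a data-entry error
        if customer_id in seen_ids:
            continue  # keep only the first valid record per customer
        seen_ids.add(customer_id)
        cleaned.append({
            "customer_id": customer_id,
            "monthly_spend": spend,
            # Normalise free-text region values; fall back to "unknown".
            "region": str(row.get("region") or "").strip().lower() or "unknown",
        })
    return cleaned
```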

Stage 3: Algorithm Design and Training

- Purpose: Design the algorithm based on the requirements and the available data, then train it.

- Activities: Selecting an appropriate modeling approach for the task (e.g., regression, classification, or clustering), designing the initial algorithmic structure, and training candidate models on the prepared data. This includes defining how the algorithm will process inputs to produce outputs.
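To illustrate selecting and training candidate models, here is a toy holdout comparison between a majority-class baseline and a one-feature decision stump. Both candidates, the `select_model` helper, and the data are hypothetical stand-ins chosen for brevity; a real project would typically compare library models (for example from Scikit-learn) using cross-validation.

```python
from collections import Counter


def majority_baseline(train):
    """Candidate 1: always predict the most common training label."""
    label = Counter(y for _, y in train).most_common(1)[0][0]
    return lambda x: label


def decision_stump(train):
    """Candidate 2: learn one threshold t and predict 1 when x >= t.

    Assumes larger x values are associated with label 1.
    """
    best_t, best_correct = None, -1
    for t in sorted({x for x, _ in train}):
        correct = sum(int(x >= t) == y for x, y in train)
        if correct > best_correct:
            best_t, best_correct = t, correct
    return lambda x: int(x >= best_t)


def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)


def select_model(train, validation, candidates):
    """Train each candidate on `train`; keep the best scorer on `validation`."""
    scores = {name: accuracy(build(train), validation)
              for name, build in candidates.items()}
    best = max(scores, key=scores.get)
    return best, scores


# Toy data: (feature, label) pairs.
train = [(1.0, 0), (2.0, 0), (3.0, 0), (4.0, 1), (5.0, 1)]
validation = [(1.5, 0), (3.5, 0), (4.5, 1), (6.0, 1)]
best, scores = select_model(
    train, validation,
    {"majority_baseline": majority_baseline, "decision_stump": decision_stump},
)
```

Keeping a trivial baseline in the comparison is a cheap sanity check: a more complex model that cannot beat it on validation data is not worth moving forward.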

Stage 4: Development and Prototyping

- Purpose: Develop a working model of the algorithm to test its effectiveness.

- Activities: Writing code to implement the algorithm, typically in programming languages such as Python, R, or Java, and drawing on libraries and frameworks to speed up development.
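As an example of what a first prototype might look like in Python, the sketch below implements binary logistic regression trained with batch gradient descent. Logistic regression is a swapped-in example, not an algorithm the stages above prescribe, and the learning rate and epoch count are illustrative defaults. The `fit`/`predict` method names loosely follow the Scikit-learn estimator convention so the prototype could later be replaced by a library implementation.

```python
import math


class LogisticRegressionPrototype:
    """Binary logistic regression trained with batch gradient descent."""

    def __init__(self, learning_rate=0.1, epochs=1000):
        self.learning_rate = learning_rate
        self.epochs = epochs
        self.weights, self.bias = [], 0.0

    @staticmethod
    def _sigmoid(z):
        # Numerically stable form that avoids overflow in math.exp.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    def predict_proba(self, row):
        z = sum(w * x for w, x in zip(self.weights, row)) + self.bias
        return self._sigmoid(z)

    def fit(self, X, y):
        n_samples, n_features = len(X), len(X[0])
        self.weights, self.bias = [0.0] * n_features, 0.0
        for _ in range(self.epochs):
            grad_w, grad_b = [0.0] * n_features, 0.0
            for row, target in zip(X, y):
                # For log-loss, d(loss)/dz is (predicted probability - label).
                error = self.predict_proba(row) - target
                for j, value in enumerate(row):
                    grad_w[j] += error * value
                grad_b += error
            # Step against the average gradient over the whole batch.
            self.weights = [w - self.learning_rate * g / n_samples
                            for w, g in zip(self.weights, grad_w)]
            self.bias -= self.learning_rate * grad_b / n_samples
        return self

    def predict(self, X, threshold=0.5):
        return [int(self.predict_proba(row) >= threshold) for row in X]


# Tiny linearly separable example.
X = [[-2.0], [-1.0], [1.0], [2.0]]
y = [0, 0, 1, 1]
model = LogisticRegressionPrototype().fit(X, y)
```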

Stage 5: Testing and Validation

- Purpose: Ensure the algorithm works as expected and meets predefined criteria.

- Activities: Testing the algorithm on held-out datasets that were not used for training, and evaluating its performance with metrics such as accuracy, precision, recall, and F1 score. This stage helps identify biases, errors, or underperformance before deployment.
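The evaluation metrics named above can be computed directly from counts of true positives, false positives, and false negatives. The function below is a minimal sketch for binary labels; it returns 0.0 when a denominator is zero, which is one common convention rather than the only one. Libraries such as Scikit-learn provide equivalent functions (`accuracy_score`, `precision_score`, `recall_score`, `f1_score`).

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary classifier."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

For example, with `y_true = [1, 0, 1, 1, 0, 0, 1, 0]` and `y_pred = [1, 0, 0, 1, 0, 1, 1, 0]` there are 3 true positives, 1 false positive, and 1 false negative, so all four metrics come out to 0.75.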

Stage 6: Deployment

- Purpose: Deploy the algorithm into a production environment where it can start providing value.

- Activities: Integrating the algorithm with existing business systems, setting up a deployment environment (on-premises or cloud), and ensuring that the algorithm can handle real-time data and user requests efficiently.
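One way to keep the integration work testable is to separate the prediction logic from the web server. The sketch below assumes a hypothetical model artefact stored as JSON (a simple linear scorer) and a transport-agnostic `handle_request` function that validates the input and returns a status code plus a JSON body. Wiring it into a web framework and packaging it in a container are left out, and the artefact format and the choice of 400 versus 422 status codes are assumptions.

```python
import json

# Hypothetical model artefact: in practice the training stage would produce it
# and the service would load it from storage at start-up.
MODEL_JSON = '{"version": "1.0", "weights": [0.5, -0.25], "bias": 0.1}'


def load_model(serialized):
    """Parse a JSON model artefact and check it has the fields the service needs."""
    model = json.loads(serialized)
    if not all(key in model for key in ("weights", "bias")):
        raise ValueError("model artefact is missing weights or bias")
    return model


def handle_request(body, model):
    """Validate a JSON prediction request and return (status_code, json_response)."""
    try:
        payload = json.loads(body)
        features = [float(v) for v in payload["features"]]
    except (KeyError, TypeError, ValueError):  # JSONDecodeError is a ValueError
        return 400, json.dumps({"error": "expected JSON with a numeric 'features' list"})
    if len(features) != len(model["weights"]):
        return 422, json.dumps({"error": f"expected {len(model['weights'])} features"})
    # Linear score: weighted sum of features plus bias.
    score = sum(w * x for w, x in zip(model["weights"], features)) + model["bias"]
    return 200, json.dumps({"score": score, "model_version": model.get("version")})
```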

Stage 7: Monitoring and Updating

- Purpose: Continuously improve the algorithm based on its performance and changing conditions.

- Activities: Monitoring the algorithm’s performance over time, collecting feedback, and making necessary adjustments or updates. This stage is crucial for adapting to new data and evolving market conditions, ensuring the algorithm remains effective and relevant.
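Monitoring often includes checking whether live input data has drifted away from the data the model was trained on. A minimal sketch using the Population Stability Index (PSI), one common drift measure, is shown below. PSI sums (actual share - expected share) * ln(actual share / expected share) over bins; the equal-width binning, the `eps` floor for empty bins, and the default bin count are simplifying assumptions.

```python
import math


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """Compare two samples of one feature; larger PSI means more distribution drift."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range fall into the first or last bin.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = proportions(reference), proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))
```

A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, which could trigger a (hypothetical) retraining job; thresholds are best tuned per feature.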

2. Tools Used in Algorithm Development

- Programming Languages: Python, R, Java, and Scala are commonly used due to their powerful libraries and frameworks.

- Frameworks and Libraries: Scikit-learn for classical machine learning; TensorFlow, PyTorch, and Keras for deep learning; Pandas for data manipulation; and Apache Spark for big data processing.

- Development Environments: Jupyter Notebook, RStudio, and integrated development environments (IDEs) like PyCharm and Visual Studio.

- Data Storage and Management: SQL databases, NoSQL databases like MongoDB and Cassandra, and big data platforms like Hadoop.

- Deployment Tools: Docker for containerization, Kubernetes for orchestration, and cloud platforms like AWS, Google Cloud, and Azure for scalable deployment.

3. Additional Considerations

- Ethics and Bias: Designing algorithms ethically and minimizing bias is crucial. This involves techniques for detecting and mitigating bias in both the training data and the model’s outputs.

- Security: Implementing security measures to protect data and algorithms, especially when deployed in sensitive or critical environments.

- Compliance: Adhering to regulations like GDPR for data privacy and other industry-specific standards.
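Bias detection can start with simple checks. One common example is demographic parity: comparing how often the model makes a positive prediction for each group. The sketch below assumes binary predictions and a hypothetical group label per record. A non-zero gap flags something to investigate rather than proving unfairness, and other criteria such as equalized odds may suit a given use case better; toolkits such as Fairlearn offer this and related metrics.

```python
from collections import defaultdict


def selection_rates(predictions, groups, positive=1):
    """Share of positive predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == positive)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups, positive=1):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups, positive)
    return max(rates.values()) - min(rates.values())
```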

AI factories streamline these stages into a cohesive, continuous pipeline. This makes algorithm development more agile and efficient, and lets firms adapt and innovate rapidly based on actionable, AI-driven insights.
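A minimal sketch of that idea: each stage is a function that takes and returns a shared context dictionary, and a quality gate stops deployment when the validation metric falls below a threshold. The stage names, the `run_pipeline` helper, and the default F1 gate of 0.8 are hypothetical; a production AI factory would typically rely on dedicated orchestration tooling rather than a hand-rolled loop.

```python
def run_pipeline(stages, context, gate_metric="f1", min_score=0.8):
    """Run (name, function) stages in order, sharing one context dictionary.

    A quality gate checks the validation metric before the "deploy" stage runs.
    """
    for name, stage in stages:
        if name == "deploy":
            score = context.get("metrics", {}).get(gate_metric, 0.0)
            if score < min_score:
                context["status"] = f"blocked: {gate_metric}={score} < {min_score}"
                return context
        context = stage(context)
        context.setdefault("completed", []).append(name)
    context["status"] = "completed"
    return context


# Toy stages standing in for data preparation, training, validation, and deployment.
stages = [
    ("prepare", lambda ctx: {**ctx, "rows": 1000}),
    ("train", lambda ctx: {**ctx, "model": "model-v1"}),
    ("validate", lambda ctx: {**ctx, "metrics": {"f1": ctx["simulated_f1"]}}),
    ("deploy", lambda ctx: {**ctx, "endpoint": "live"}),
]
```

In a continuous setup, a monitoring alert would simply re-invoke `run_pipeline` with fresh data, so every retrained model passes through the same gate before it reaches production.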

Other Episodes:

AI Factories: Episode 1 – So, what exactly is “AI Factories” or “AI Factory”?

AI Factories: Episode 2 – Virtuous Cycle in AI Factories

AI Factories: Episode 3 – The Components of AI Factories

AI Factories: Episode 4 – Data Pipelines

#AIFactories #AlgorithmDevelopment #DataScience #MachineLearning #AI #ArtificialIntelligence #DeepLearning #TechInnovation #BigData #AIIntegration #CloudComputing #DevOps #DataAnalytics #PredictiveAnalytics #EthicalAI #TechTrends #DigitalTransformation #BusinessAutomation #AIinBusiness #SmartTechnology #FutureofAI